I want to learn more about how video codecs work so someday I can grow up to be a Jedi-master Video Engineer. To aid me on this epic quest, I'm doing the one-week "mini-batch" at the Recurse Center. Here are my notes from Day 1.

Step 1 - Find a VP9 sample video

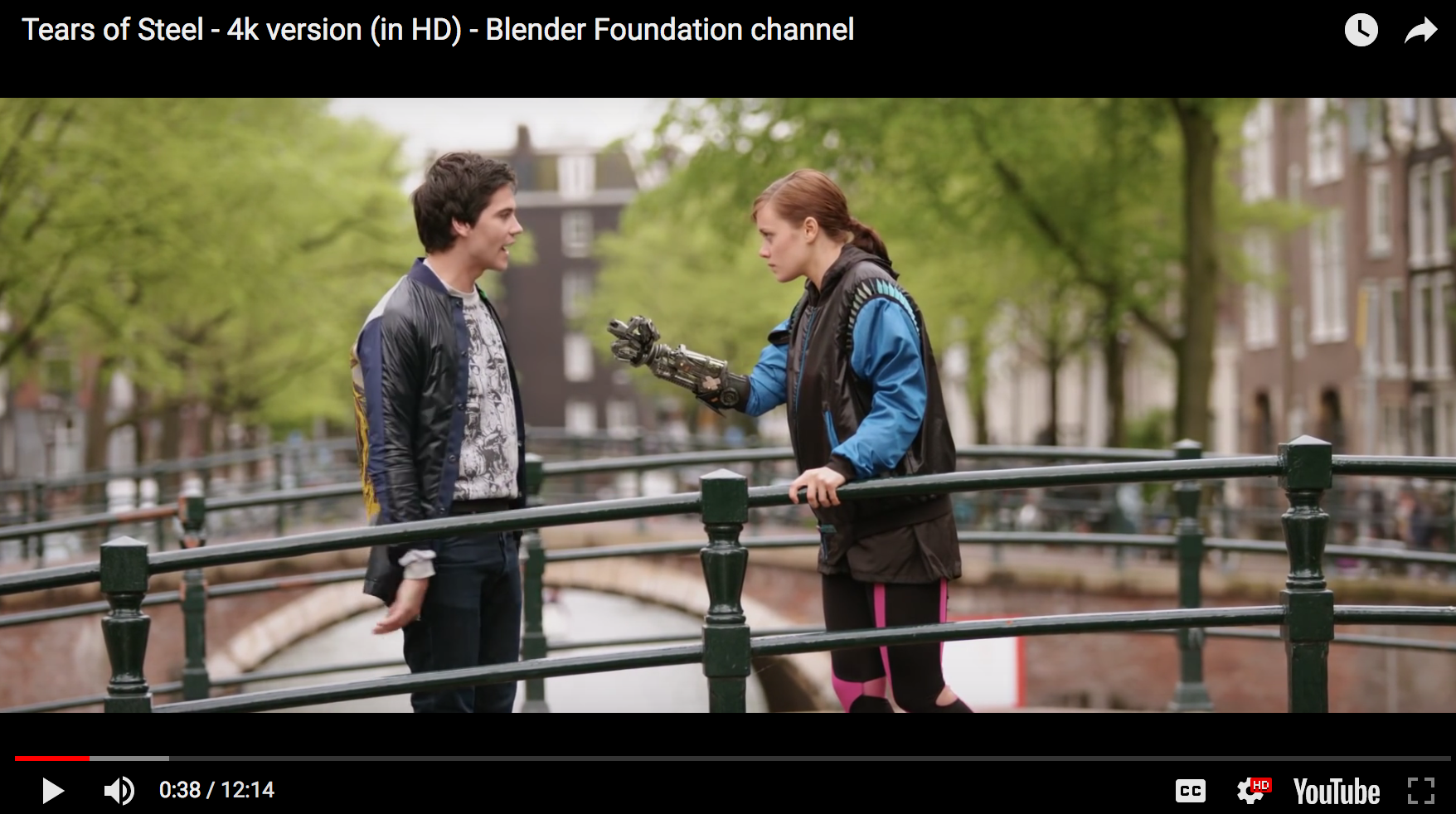

Tears of Steel is legendary for all the different kinds of lighting, motion, color, and uh...things one might need to compress with a video encoder. I've been wanting to play with that video for a long time. It's licensed under Creative Commons, made by the good people at Blender, and it's actually pretty fun to watch. A quick search on DuckDuckGo shows you can download a webm version of the file. This is great news because webm videos use the vp9 codec, which is what I'm spending my week at the Recurse Center in NYC learning about.

Except that webm doesn't only mean vp9...it also means vp8. Time to transcode the video using ffmpeg to use vp9:

ffmpeg -i tears_of_steel_1080p.webm -c:v libvpx-vp9 -c:a libopus output.webm

- `-i` means input file

- `-c:v` specifies the video codec for all streams in the file, and `libvpx-vp9` means use the libvpx library's vp9 codec

- `-c:a` is the same thing, but for audio

- `output.webm` is the output file that ffmpeg will create. It infers lots of things from the fact I've used the .webm file extension

Oh man...ffmpeg is going so slow. The output looks like this:

frame= 7540 fps=3.6 q=0.0 size= 13211kB time=00:05:14.31 bitrate= 344.3kbits/s speed=0.15x

The video is 12 minutes and 14 seconds long, but I've already waited for at least an hour...

Two hours later...

I've retranscoded to vp9! I've managed to fix three bugs in my blog while waiting for the transcode to finish. If I had to guess, the job took so long because I didn't specify any quantization parameters (that's the "q=0.0")...I really need to read up on exactly what quanta are on wikipedia.

A quick digression into quantization...with a picture!

The intro to that article was really helpful. Apparently, quantization basically just means taking some information that has an infinite range of possible values and chopping it into a finite number of discrete values. Actually my picture used in the header of this blog is a great example of quantization. The original photo on the left has tons and tons of colors, but I've forced the header image (on the right) to use fewer than 10 colors. Quantization. Not so scary a concept from 20,000 feet.
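To make the idea concrete, here's a minimal sketch of uniform quantization on a single 8-bit sample (one color channel of one pixel, say). The function name `quantize` and the even spacing of levels are assumptions for illustration; this shows the general concept only, not how VP9 or an image editor actually picks its levels.

```rust
/// Snap an 8-bit sample to the nearest of `levels` evenly spaced values
/// between 0 and 255 (uniform quantization).
fn quantize(sample: u8, levels: u32) -> u8 {
    assert!((2..=256).contains(&levels), "levels must be in 2..=256");
    // Distance between two neighbouring output values.
    let step = 255.0 / (levels - 1) as f64;
    // Index of the nearest output value, then back to the 0..=255 range.
    let index = (sample as f64 / step).round();
    (index * step).round() as u8
}
```

With 4 levels the only possible outputs are 0, 85, 170 and 255, so `quantize(100, 4)` gives 85 and `quantize(130, 4)` gives 170. Fewer levels means less information to store, at the cost of a blockier picture.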

Using mkvinfo, I can see that my output.webm is actually VP9 now. Phew! Okay, I have a sample video.

Before coming to the Recurse Center, I'd been reading up on the Matroska format to get ready to build my decoder...but total fail, that's just the container format and contains 0% of video information. Now the awesome Rust libraries I found are basically worthless...Hmm, time to rethink the project? Nope, time for boba tea and a little reflection.

Boba Interlude

It's raining and the boba was delicious. I'm just starting to skim the table of contents of the VP9 spec, and it's a little overwhelming. I've asked the most excellent video-dev Slack org for help finding suitable alternative projects. Here are some ideas that have come to mind:

- Rewrite a simplified ffmpeg-like-tool that consumes libav or libvpx to transcode or decode a video

- Just read through the whole VP9 spec

- Contribute to FFMPEG/VP9/AV1 open source projects

Might be helpful to take a step back here. When I leave Recurse Center, I want to understand more about how to efficiently encode and troubleshoot videos, especially those using VP9. So, there's actually a lot of value in just understanding the webm / Matroska format...There's also value in understanding how a video gets encoded using VP9, so I can reason about the quality/filesize tradeoffs and which encoding parameters can be tuned a bit better. Actually knowing the super deep technical areas of VP9 might not be totally necessary...

I just got a great suggestion from Slack -- try to contribute to the AOM Analyzer project. I remember seeing this before -- and it's really cool. However, it's really broken on both Firefox (my browser of choice since 2014) and Chrome. Maybe another time I'd look at that project again, but not today.

Contributing to ffmpeg or AV1 would be super amazing, but I think I need a stronger foundation in how the codecs themselves work. FFMPEG is notoriously hard to contribute to, but I wonder if there are beginner issues for AV1...ah, nope, there's only an issue tracker for AV1 and no obvious on-ramp there, although there is documentation about building and testing the project. Still seems a little too pie-in-the-sky.

Decision Time

Why don't I use the Matroska rust library to extract the VP9 bits of a video and try decoding the first frame first using libvpx; once I've got that working, I'll try to read the spec to do that myself...Great! This could keep me busy for at least the whole week, but I'd likely learn a lot about how the codec works and build a thing that does something with a quick win and then lets me go deeper.

The Matroska crate I've been playing with doesn't provide any access to the raw bytes, so there's no way that will work. Maybe I could contribute a way to expose that crate's lower-level parsing logic. Wait...I should really look at that other library, mkv. Yeah! This does exactly what I want! It spits out typed events corresponding to the start and end of each EBML element as it does a single pass through the file. I'll build on top of that then!
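For a feel of what a single pass over EBML elements involves, here's a minimal std-only sketch of reading one element header by hand. This is not the mkv crate's API; `read_vint` and `read_element_header` are hypothetical names for illustration. It assumes the usual EBML layout, where each element starts with a variable-length ID followed by a variable-length size, and the number of leading zero bits in the first byte says how many bytes the field occupies. It does not handle the special "unknown size" value.

```rust
/// Read an EBML variable-length integer from the start of `buf`.
/// Returns (value, bytes_consumed). With `keep_marker` the length-marker bit
/// stays in the value (as in element IDs); otherwise it is masked out (as in sizes).
fn read_vint(buf: &[u8], keep_marker: bool) -> Option<(u64, usize)> {
    let first = *buf.first()?;
    if first == 0 {
        return None; // fields longer than 8 bytes are out of scope for this sketch
    }
    // 1xxxxxxx is 1 byte, 01xxxxxx is 2 bytes, 001xxxxx is 3 bytes, and so on.
    let len = first.leading_zeros() as usize + 1;
    if buf.len() < len {
        return None;
    }
    let mut value = if keep_marker {
        first as u64
    } else {
        (first as u64) & (0xFFu64 >> len)
    };
    for &b in &buf[1..len] {
        value = (value << 8) | b as u64;
    }
    Some((value, len))
}

/// Read one element header: returns (id, payload_size, header_len_in_bytes).
fn read_element_header(buf: &[u8]) -> Option<(u64, u64, usize)> {
    let (id, id_len) = read_vint(buf, true)?;
    let (size, size_len) = read_vint(&buf[id_len..], false)?;
    Some((id, size, id_len + size_len))
}
```

Fed the bytes `1A 45 DF A3 9F`, this returns the ID `0x1A45DFA3` (the top-level EBML header element that a Matroska/webm file starts with), a payload size of 31, and a header length of 5.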

Also, the Matroska stuff does actually seem to be super valuable. I really need to have a handle on the container format in order to find the image data for vp9 to process, and the webm project requires a lot of knowledge of the Matroska format...so it doesn't look like a waste of time after all.

Playing with libvpx

Okay, I'll just start by making a new GitLab project and pushing the little bit of code I have now there...and I'll add a README with a link to the Tears of Steel source I'm working with (I would love to get a "Bars and Tones" sample video for a simpler test case...).

A bowl of ramen and a carafe of sake later, it looks like the mkv crate is exactly what I want for parsing Matroska packaging. It gives me the actual binary, the offset, and an enum variant for each Matroska ID. Amazing! So, now I need to see if I can interface this with libvpx...

9:45pm -- time to head back to my apartment.

Okay, I've compiled libvpx by hand and looked closely at the simplest decoder example. I've also quickly skimmed the vpx crate and it looks like all the necessary C functions have Rust bindings. Unfortunately, none of my webm files work when I run them through the example program from libvpx. Running them through mkvalidator gives me errors on all of them, but VLC has no trouble with any of these files and ffprobe is also very happy with them.

Oops! Stupid user error on my part. Looks like that example program only works on IVF files. Today I Learned that IVF is a bare-bones container that libvpx's example tools use for raw VP8/VP9 frames. I swear I have never heard of IVF before today, and I assume it's not something I'll hear a lot about going forward. So, the program appears to have succeeded in creating a massive pile of images, but I'd love to verify. Time to download ffplay so I can follow the command that simple_decoder.c told me to run:

ffplay -f rawvideo -pix_fmt yuv420p -s 512x288 /tmp/out9-ivf
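As an aside, an IVF file is simple enough to inspect by hand. Here's a minimal sketch, assuming the usual IVF layout: a 32-byte little-endian file header that starts with the signature `DKIF` and carries the codec FourCC (such as `VP80` or `VP90`), the frame dimensions, and a frame count as written by the muxer. `IvfHeader` and `parse_ivf_header` are hypothetical names for illustration, not part of libvpx or the vpx crate.

```rust
/// The handful of IVF file-header fields this sketch cares about.
#[derive(Debug, PartialEq)]
struct IvfHeader {
    fourcc: [u8; 4],  // codec tag, e.g. b"VP90"
    width: u16,       // frame width in pixels
    height: u16,      // frame height in pixels
    frame_count: u32, // frame count as stored in the header
}

/// Parse the 32-byte IVF file header from the start of `buf`.
/// Returns None if the buffer is too short or the signature is missing.
fn parse_ivf_header(buf: &[u8]) -> Option<IvfHeader> {
    if buf.len() < 32 || &buf[0..4] != b"DKIF" {
        return None;
    }
    Some(IvfHeader {
        fourcc: [buf[8], buf[9], buf[10], buf[11]],
        width: u16::from_le_bytes([buf[12], buf[13]]),
        height: u16::from_le_bytes([buf[14], buf[15]]),
        frame_count: u32::from_le_bytes([buf[24], buf[25], buf[26], buf[27]]),
    })
}
```

A webm file fails the `DKIF` check immediately, which would explain why an IVF-only example program refuses it even though VLC and ffprobe are happy.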

Oh man...I have ffprobe and ffmpeg, but none of the other ff tools. I can never remember the right way to install ffmpeg and get ALL the tools, so here's how:

- Go to https://ffmpeg.zeranoe.com/builds/

- Click the blue Download button

- Copy everything from the bin/ directory of the unpacked tarball into /usr/local/bin/

- Make sure it worked

which ffplay

Let's try that ffplay command again now... Success!
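ffplay needs `-pix_fmt yuv420p -s 512x288` spelled out because a raw video file has no header: it's just frames back to back. That also gives a cheap sanity check on the decoder output. Here's a minimal sketch, assuming tightly packed 8-bit yuv420p frames (one full-resolution luma plane plus two chroma planes at half resolution in each direction). The function names are hypothetical.

```rust
/// Bytes in one tightly packed 8-bit yuv420p frame.
fn yuv420p_frame_bytes(width: usize, height: usize) -> usize {
    let luma = width * height;
    // Each chroma plane is half the width and half the height, rounded up.
    let chroma = ((width + 1) / 2) * ((height + 1) / 2);
    luma + 2 * chroma
}

/// Number of whole frames in a raw file of `total_bytes`, or None if the
/// size is not an exact multiple of the frame size (wrong -s or -pix_fmt?).
fn raw_frame_count(total_bytes: usize, width: usize, height: usize) -> Option<usize> {
    let frame = yuv420p_frame_bytes(width, height);
    if frame == 0 || total_bytes % frame != 0 {
        return None;
    }
    Some(total_bytes / frame)
}
```

At 512x288 a frame works out to 221184 bytes, so the size of the output file divided by that number should come out as a whole number of frames.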

Open Questions

- How does VP9 fit into webm? The code sample for simple_decoder.c makes it look like libvpx is actually parsing some matroska...but running mkvalidator seems to disprove that theory. No EBML found in the file.

- What's the difference between a `Seek` element and a `CuePoint`?

- Where does encoded audio data actually go inside a Webm file? Inside `Blocks/SimpleBlocks`?

- Why is timing so complex inside webm?

- How can I feed raw bytes into libvpx for vp9? Hmm, actually I know a vp9 codec core contributor...I should ask him about this!

- What can I reasonably try to accomplish tomorrow? I really think unpacking `SeekPositions` and possibly the `Video` element from the Webm file will point me to the correct places inside the file to find data to feed the vp9 codec. Maybe I'll just try to wrap my head around those elements better.